AI grounding connects language models to verifiable data sources. When a grounded model generates an answer, it cites specific sources, instead of just pulling from training data. Without grounding, models fabricate facts that sound plausible.

The consequences of ungrounded AI can escalate quickly, especially in highly regulated industries. Fabricated medical dosages, invented legal precedents, and outdated financial rules can trigger compliance failures and liability exposure. AI grounding mitigates these risks, creates audit trails for every claim, and improves decision accuracy through verifiable facts.

In this article, we'll explore how grounding shifts AI from probabilistic text generation to evidence-based reasoning, and the practical implementations now widely adopted across enterprise infrastructures.

How AI Grounding Works

Traditional LLMs generate outputs from patterns in their training data and can't access real-time information without architectural modifications. They optimize for plausibility rather than truth, predicting each word from learned probabilities with no fact-checking involved. Grounding interrupts this by injecting real-time evidence, forcing the model to anchor claims in retrievable information rather than fabricating plausible-sounding answers.

Step-by-Step AI Grounding Breakdown

Grounded AI systems work in three steps:

1. Context Retrieval and Injection

The grounding process starts when your query enters the system. The system converts your query into a semantic vector that captures its meaning, typically with an embedding model rather than the generating LLM itself. It then pulls the top-matching passages from documents, databases, or knowledge graphs and combines them with your original query. This enriched prompt fills in the context that the model lacks from training.
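
The retrieve-and-inject flow can be sketched in a few lines. This is a minimal illustration, not a production retriever: it assumes a toy bag-of-words vector in place of a real embedding model, and the names `embed`, `retrieve`, `build_prompt`, and the sample passages are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, passages: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return the k passages most similar to the query as (source_id, text) pairs."""
    q = embed(query)
    ranked = sorted(passages.items(), key=lambda item: cosine(q, embed(item[1])), reverse=True)
    return ranked[:k]

def build_prompt(query: str, evidence: list[tuple[str, str]]) -> str:
    """Combine retrieved passages with the original query into one enriched prompt."""
    context = "\n".join(f"[{source_id}] {text}" for source_id, text in evidence)
    return (
        "Answer using only the sources below and cite their ids.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )

# Hypothetical knowledge store, used only for illustration.
PASSAGES = {
    "policy-7": "Refunds are issued within 14 days of a return request.",
    "policy-9": "Shipping is free for orders over 50 dollars.",
    "faq-2": "Password resets require a verified email address.",
}

query = "How many days do refunds take?"
top = retrieve(query, PASSAGES, k=1)
prompt = build_prompt(query, top)
```

In a real deployment the `embed` function would call an embedding model and `retrieve` would query a vector index, but the shape of the enriched prompt stays the same: source-tagged evidence first, then the user's question.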

2. Data Selection and Integration

Grounded systems pull information from multiple sources, each with different characteristics:

- Internal sources include proprietary documents (contracts, policies, product specs), enterprise databases, and company wikis: information competitors can't access.

- External sources span public web content, regulatory databases, and premium data services for broader context and current information.

- Structured data from SQL databases, knowledge graphs, and API endpoints delivers precise, factual information with defined relationships.

- Unstructured content from documents, emails, and web pages requires semantic processing to extract relevant passages.

Vector indexing handles unstructured content, while API connectors handle structured data. Update schedules vary by source: transaction data might refresh in real time, while documents refresh nightly.
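
One way to keep track of these differences is a small source registry. The sketch below is an assumption about how such a registry could look, not a description of any particular platform: the `Source` fields, the connector labels, and the sample timestamps are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class Source:
    name: str
    kind: str                 # "structured" or "unstructured"
    refresh_every: timedelta  # how often this source is expected to refresh
    last_refreshed: datetime

def connector_for(source: Source) -> str:
    """Route structured data to API connectors and unstructured content to a vector index."""
    return "api_connector" if source.kind == "structured" else "vector_index"

def stale_sources(sources: list[Source], now: datetime) -> list[str]:
    """Names of sources whose last refresh is older than their schedule allows."""
    return [s.name for s in sources if now - s.last_refreshed > s.refresh_every]

# Hypothetical sources and timestamps for illustration only.
now = datetime(2025, 1, 2, 9, 0)
SOURCES = [
    Source("transactions_db", "structured", timedelta(minutes=1), datetime(2025, 1, 2, 8, 59, 30)),
    Source("policy_docs", "unstructured", timedelta(days=1), datetime(2024, 12, 31, 2, 0)),
]

overdue = stale_sources(SOURCES, now)
```

Here `overdue` flags `policy_docs`, whose nightly refresh was missed, while the transaction feed is still within its one-minute window.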

3. Reasoning and Response Generation

With context assembled, the model generates responses grounded in retrieved information. It reasons over both question and evidence, producing outputs that reference source material. Each statement links back to specific documents for verification.

Systems balance reasoning depth against speed through two levers: context volume and model choice. Customer chat needs fast models with less context. Research tools need deeper reasoning with more evidence.

For high-stakes domains like healthcare or legal analysis, human reviewers should validate outputs before delivery. These expert reviews feed back into the system, improving future responses through retrieval weight adjustments rather than model retraining.
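
The feedback loop from reviewers to retrieval can be illustrated with per-source weights. This is a simplified sketch under stated assumptions: the step size, the weight bounds, and the function names `apply_review` and `rerank` are hypothetical, and real systems may adjust retrieval in other ways.

```python
def apply_review(weights: dict[str, float], source: str, approved: bool,
                 step: float = 0.1, floor: float = 0.1, ceiling: float = 2.0) -> dict[str, float]:
    """Return a copy of weights with one source nudged up (approved) or down (rejected)."""
    updated = dict(weights)
    current = updated.get(source, 1.0)
    delta = step if approved else -step
    updated[source] = round(min(ceiling, max(floor, current + delta)), 3)
    return updated

def rerank(scored: list[tuple[str, float]], weights: dict[str, float]) -> list[str]:
    """Order sources by similarity score multiplied by their review-derived weight."""
    ordered = sorted(scored, key=lambda pair: pair[1] * weights.get(pair[0], 1.0), reverse=True)
    return [source for source, _ in ordered]

# Hypothetical sources: a reviewer rejects one citation and approves another.
weights = {"clinical_guidelines": 1.0, "marketing_blog": 1.0}
weights = apply_review(weights, "marketing_blog", approved=False)
weights = apply_review(weights, "clinical_guidelines", approved=True)

order = rerank([("marketing_blog", 0.80), ("clinical_guidelines", 0.75)], weights)
```

After the two reviews, the clinical source outranks the marketing source even though its raw similarity score is slightly lower, and no model weights were touched.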

Popular Grounding Techniques

AI grounding techniques fall into five main approaches, each with different trade-offs for integration complexity and update frequency.

- Retrieval-augmented generation (RAG) powers most enterprise deployments. The system embeds your question, searches knowledge stores for matching content, and then provides both query and evidence to the model. Information stays current without model retraining.

- In-context learning embeds specific examples and reference information directly in prompts. By including authoritative statements or demonstrations within the prompt itself, models align outputs with approved data without architecture changes.

- Agentic grounding uses autonomous processes to select, verify, and combine information sources. Agents strategically query multiple knowledge stores, validate results against each other, and chain specialized models to build more reliable answers.

- Fine-tuning adapts base models through continued training on domain-specific datasets. This embeds knowledge into model weights, but you're stuck retraining whenever information changes.

- Few-shot learning guides model behavior through carefully selected examples in the prompt. This technique steers outputs toward desired patterns without modifying model weights.

Most production systems combine multiple techniques: RAG for real-time accuracy, fine-tuning for domain expertise, and in-context learning for quick adjustments without redeployment.
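
The two prompt-level techniques, in-context reference information and few-shot examples, are easy to combine. The following is a minimal sketch; the function name `build_grounded_prompt`, the prompt wording, and the sample facts are illustrative assumptions rather than a recommended template.

```python
def build_grounded_prompt(question: str, reference_facts: list[str],
                          examples: list[tuple[str, str]]) -> str:
    """Assemble a prompt from approved facts (in-context) and Q/A demonstrations (few-shot)."""
    facts = "\n".join(f"- {fact}" for fact in reference_facts)
    demos = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return (
        "Use only the approved facts below.\n\n"
        f"Approved facts:\n{facts}\n\n"
        f"Examples:\n{demos}\n\n"
        f"Q: {question}\nA:"
    )

# Hypothetical product facts and demonstration, for illustration only.
few_shot_prompt = build_grounded_prompt(
    "What is the warranty period for the X200?",
    ["The X200 carries a 24-month warranty.", "Warranty claims require proof of purchase."],
    [("What is the return window?", "30 days, per the returns policy.")],
)
```

Because everything lives in the prompt, changing an approved fact or swapping a demonstration takes effect on the next request, with no retraining or redeployment.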

Why Enterprises Need Grounded AI

Enterprises face a problem: language models are powerful, but business-critical decisions demand a level of accuracy that ungrounded models can't guarantee. Grounded systems address this gap through seven benefits.

- Better decisions: Executives verify evidence before acting instead of trusting black-box outputs.

- Compliance made manageable: Audit trails link every AI statement to source documents, critical for regulated industries.

- Hallucinations reduced: Every claim anchors to retrievable sources rather than fabricated patterns.

- Answers that fit your business: Grounded systems incorporate your proprietary knowledge, not just public data competitors also access.

- Security at the source: Access controls operate at the retrieval layer, so sensitive data never reaches the model without proper authorization.

- Fix information, not models: Update knowledge sources directly instead of retraining models when facts change.

- No vendor lock-in: Model-agnostic architectures let you swap components as AI capabilities evolve.
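
The "security at the source" point can be made concrete with a filter that runs before any retrieval or ranking happens. This is a hedged sketch: the `Document` shape, the role names, and `authorized_documents` are hypothetical, and a real deployment would defer to its existing identity provider.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset

def authorized_documents(docs: list[Document], user_roles: frozenset) -> list[Document]:
    """Keep only documents that share at least one role with the requesting user."""
    return [doc for doc in docs if doc.allowed_roles & user_roles]

# Hypothetical documents and roles for illustration only.
DOCS = [
    Document("hr-salaries", "Salary bands by level.", frozenset({"hr"})),
    Document("handbook", "Company holiday schedule.", frozenset({"hr", "employee"})),
]

visible = authorized_documents(DOCS, frozenset({"employee"}))
visible_ids = [doc.doc_id for doc in visible]
```

Only the documents in `visible` are eligible for retrieval, so restricted content never enters the prompt for a user who lacks the matching role.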

Implementing grounded AI requires architectural choices around flexibility, integration complexity, and maintenance. Several approaches have emerged in the market, each with distinct trade-offs:

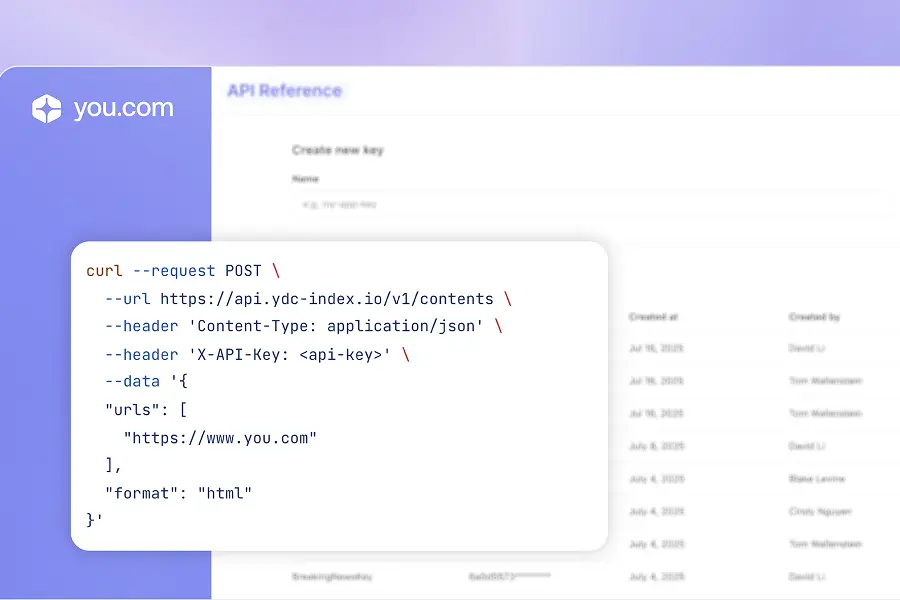

You.com, for example, uses vertical indexes for specific industries (legal, finance, research) with model-agnostic routing. It combines real-time web data with internal knowledge bases, offers zero data retention, and treats LLMs as interchangeable components, so you avoid vendor lock-in as AI evolves.

Closed ecosystems lock you into specific data sources and models, while open platforms keep both flexible as AI evolves. Enterprises generally favor the latter.

How to Get Started: Choosing the Right Grounded AI Platform

Selecting and deploying a grounded AI platform means considering five factors:

- Performance accuracy directly impacts business outcomes by ensuring every generated response faithfully represents source material across your domain-specific questions.

- Architectural flexibility preserves your options as AI evolves rapidly by allowing model swapping without rebuilding applications.

- Enterprise security determines compliance readiness through SOC 2 certification, zero data retention policies, and identity controls that respect existing access boundaries.

- Vertical indexes provide domain-targeted knowledge sources for legal, healthcare, and retail contexts, enabling access to specialized data that generic search APIs lack.

- Deployment speed accelerates time-to-value through self-service implementation and pre-built connectors that reduce integration from months to weeks.

Once you've chosen a platform, roll it out in deliberate steps:

- Inventory knowledge sources and classify them by sensitivity, update frequency, and access requirements to define your grounding architecture.

- Select a focused pilot with measurable impact: customer support efficiency, contract review accuracy, or research acceleration. Define success metrics before starting.

- Run a four-week pilot testing the system with a limited user group. Measure accuracy gains, time savings, and user satisfaction compared to existing workflows.

- Implement refresh pipelines to ensure knowledge sources stay current. This is a common failure point in grounded systems.

- Establish quarterly audits of citation accuracy and data health to maintain system reliability as your information landscape evolves.

Start Building with Grounded AI Infrastructure

Grounding changes how AI systems generate answers. Instead of predicting plausible text from training patterns, grounded models retrieve evidence from knowledge bases and cite their sources. This shift from probabilistic generation to evidence-based reasoning addresses the core limitation that prevents enterprise AI adoption: you can't verify outputs that don't link back to source material.

You.com addresses this with an enterprise AI search infrastructure that connects models to live web data, internal content, and structured sources. Every output includes source citations, and zero data retention is available when you need it. This approach gives teams a consistent way to access, verify, and apply business-critical information, without requiring model retraining or compromising on traceability.

Frequently Asked Questions

1. How does grounding affect response latency?

Grounding adds a retrieval step before generation, which increases response time. Research shows retrieval accounts for 35-47% of time-to-first-token latency in production RAG systems. For customer-facing chat where milliseconds matter, this trade-off requires careful tuning: smaller knowledge bases, faster vector indexes, or tiered retrieval that skips deep search for simple queries. For research, compliance, or contract review where accuracy outweighs speed, the overhead is worth it. Most production systems offer configuration options to balance depth against latency based on use case.
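
Tiered retrieval can be sketched as a router that sends simple queries to a fast path. The word-count heuristic, the threshold, and the stub indexes below are assumptions made for illustration; production routers often rely on a classifier or on query intent instead.

```python
from typing import Callable

def choose_tier(query: str, simple_word_limit: int = 6) -> str:
    """Pick a retrieval tier with a crude heuristic: short queries skip deep search."""
    return "fast" if len(query.split()) <= simple_word_limit else "deep"

def retrieve_tiered(query: str,
                    fast_index: Callable[[str], list[str]],
                    deep_index: Callable[[str], list[str]]) -> tuple[str, list[str]]:
    """Run the query against the index selected by choose_tier."""
    tier = choose_tier(query)
    search = fast_index if tier == "fast" else deep_index
    return tier, search(query)

# Stub indexes standing in for a small cached index and a full corpus search.
def fast_stub(query: str) -> list[str]:
    return ["faq-1"]

def deep_stub(query: str) -> list[str]:
    return ["contract-12", "policy-3", "memo-8"]

simple = retrieve_tiered("What are your opening hours?", fast_stub, deep_stub)
complex_query = (
    "Which indemnification clauses in our 2023 supplier contracts "
    "conflict with the updated procurement policy?"
)
detailed = retrieve_tiered(complex_query, fast_stub, deep_stub)
```

The short support question stays on the fast tier, while the contract question pays the extra latency for deeper search.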

2. Can grounded AI make mistakes?

Yes. Grounding anchors models to whatever sources you provide, which means bad sources produce bad outputs. If your knowledge base contains outdated policies, incorrect product specifications, or biased information, the model will cite those errors confidently. The model can't fact-check your sources; it can only retrieve and cite them. Regular source audits and version control on your knowledge bases become critical maintenance tasks, not nice-to-haves.

3. What are the biggest mistakes teams make when implementing grounding?

The most common error is treating grounding as a one-time setup instead of an ongoing maintenance task. Knowledge sources go stale fast. Teams also overload context windows with too much retrieved information, which slows responses and confuses models. Start with focused, high-quality sources rather than dumping every document into the retrieval system.

Another mistake is skipping human validation during pilots. Run expert reviews on 20-30 responses weekly to catch retrieval problems early, before they become embedded in production workflows. Finally, many teams ignore citation quality metrics until users complain. Track which sources the system actually uses versus which it should use. If your legal bot keeps citing marketing materials instead of contracts, your retrieval weights need adjustment.
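
Tracking which sources the system actually cites can start as a simple tally. In this sketch the category labels, the 50% tolerance, and the function names are hypothetical; pick thresholds that match your own use case.

```python
from collections import Counter

def citation_mix(cited_sources: list[str]) -> dict[str, float]:
    """Share of citations attributed to each source category."""
    counts = Counter(cited_sources)
    total = sum(counts.values())
    if not total:
        return {}
    return {category: count / total for category, count in counts.items()}

def off_target(mix: dict[str, float], expected: list[str], tolerance: float = 0.5) -> list[str]:
    """Expected categories whose citation share falls below the tolerance."""
    return [category for category in expected if mix.get(category, 0.0) < tolerance]

# Hypothetical log from a legal bot that should mostly cite contracts.
cited = ["marketing", "marketing", "contracts", "marketing"]
mix = citation_mix(cited)
flagged = off_target(mix, expected=["contracts"])
```

Here contracts account for only a quarter of citations, so `flagged` surfaces them as under-used, which is the signal to revisit retrieval weights.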

4. In which industries or use cases is AI grounding most critical?

AI grounding matters most in regulated industries where unverified outputs create liability exposure: healthcare (medication dosages), legal (case precedents), and finance (compliance rules). Any domain requiring audit trails benefits from grounding, including contract review, regulatory compliance, and strategic research where decisions depend on verifiable facts. Customer support and content moderation also need grounding when accuracy and accountability matter more than response speed.

5. How do I measure whether grounding is actually improving my AI outputs?

Track three metrics in your pilot: citation accuracy (what percentage of facts link to correct sources), retrieval precision (whether the system pulls relevant documents for each query), and answer correctness compared to expert validation. Run weekly spot checks where domain experts validate 20-30 responses against source documents. If citation accuracy drops below 90%, your retrieval system needs tuning. If answer correctness lags, you need better source coverage or different grounding techniques for that domain.
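
The three metrics can be computed from a weekly batch of expert-reviewed responses. The sketch below assumes a hypothetical `ReviewedResponse` record filled in by reviewers; the sample numbers are invented for illustration, and the 90% threshold comes from the guidance above.

```python
from dataclasses import dataclass

@dataclass
class ReviewedResponse:
    citations_total: int    # facts in the answer that carry a citation
    citations_correct: int  # citations the expert confirmed point to the right source
    docs_retrieved: int     # documents pulled for the query
    docs_relevant: int      # retrieved documents the expert judged relevant
    answer_correct: bool    # expert verdict on the final answer

def ratio(numerator: int, denominator: int) -> float:
    """Safe division that returns 0.0 when there is nothing to measure."""
    return numerator / denominator if denominator else 0.0

def pilot_metrics(samples: list[ReviewedResponse]) -> dict[str, float]:
    """Aggregate citation accuracy, retrieval precision, and answer correctness."""
    return {
        "citation_accuracy": ratio(sum(s.citations_correct for s in samples),
                                   sum(s.citations_total for s in samples)),
        "retrieval_precision": ratio(sum(s.docs_relevant for s in samples),
                                     sum(s.docs_retrieved for s in samples)),
        "answer_correctness": ratio(sum(s.answer_correct for s in samples), len(samples)),
    }

# Invented review batch for illustration only.
batch = [
    ReviewedResponse(4, 4, 5, 4, True),
    ReviewedResponse(3, 2, 5, 3, True),
    ReviewedResponse(3, 2, 5, 5, False),
]
metrics = pilot_metrics(batch)
needs_retrieval_tuning = metrics["citation_accuracy"] < 0.90
```

With this batch, citation accuracy is 8 out of 10, which falls below the 90% line and sets `needs_retrieval_tuning` to `True`.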
