TLDR: Semantic chunking splits text at topic boundaries rather than token counts by using embedding similarity to detect when adjacent sentences stop being related. It fixes specific retrieval problems well, but benchmarks show it isn't universally better than simpler approaches. Profile your pipeline before adding the overhead.

Why Chunking Strategy Affects Retrieval Quality

RAG pipelines don't run inference over entire documents. They retrieve the top-k most relevant chunks from a vector index and pass those as context to the LLM. How well those chunks preserve semantic coherence matters because each chunk gets encoded into a fixed-size embedding, and similarity search operates on those vectors. A chunk that splits mid-concept produces a degraded embedding that ranks poorly against relevant queries, even when the underlying content is a good match.
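As a rough illustration of the retrieval step described above, here is a minimal brute-force top-k search over chunk embeddings. The two-dimensional vectors, the chunk ids, and the function names are all made up for the example; a production system would use a real embedding model and a vector index rather than a sorted list.

```python
import math


def cosine(a, b):
    # Cosine similarity between two equal-length vectors.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, chunk_vecs, k):
    # Rank every chunk by similarity to the query and keep the best k ids.
    ranked = sorted(
        chunk_vecs.items(),
        key=lambda item: cosine(query_vec, item[1]),
        reverse=True,
    )
    return [chunk_id for chunk_id, _ in ranked[:k]]


# Hypothetical embeddings: axis 0 ~ "installation", axis 1 ~ "troubleshooting".
chunk_vecs = {
    "install": [1.0, 0.0],
    "troubleshoot": [0.0, 1.0],
    "mixed": [1.0, 1.0],  # a chunk that straddles both topics
}
print(top_k([1.0, 0.1], chunk_vecs, k=2))  # ['install', 'mixed']
```

The "mixed" chunk scores lower than the focused one for an installation query, which is the ranking penalty a mid-concept split tends to incur.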

Consider a product manual that covers installation before transitioning into troubleshooting. Under fixed-size splitting, you'll sometimes produce one chunk whose trailing tokens cover installation and another whose opening tokens start the troubleshooting section without the setup those steps depend on. Both chunks generate embeddings that partially misrepresent their content, and neither retrieves reliably for queries that span the transition. That's the failure mode chunking strategy is meant to prevent.

How Semantic Chunking Works

Rather than splitting at every nth token, semantic chunking uses embedding similarity to find where adjacent sentences stop being related and places chunk boundaries there.

The algorithm runs in three stages:

- First, the document is segmented into sentences, and each sentence gets encoded into an embedding vector.

- The system then computes cosine similarity between consecutive sentence pairs across the document.

- When that similarity score drops sharply relative to surrounding pairs, the system infers a topic shift and inserts a chunk boundary at that position.

The threshold isn't a fixed value applied uniformly across all documents. It's set at a configurable percentile of the pairwise distances (1 minus cosine similarity) within each document, typically between the 80th and 95th percentile, and a boundary is placed wherever the distance between two consecutive sentences exceeds it. That makes boundary detection adaptive to each document's structure rather than requiring manual calibration per corpus. A dense technical spec where most sentences are tightly related will produce a different threshold than a report where topics shift more frequently.
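The stages above can be sketched in a few dozen lines. This is a minimal sketch under stated assumptions, not any particular library's implementation: `toy_embed` is a bag-of-words stand-in for a real embedding model, the sentence splitter is a naive English regex, and the percentile is taken over distances (1 minus cosine similarity) so that a high percentile breaks only at the sharpest shifts. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def split_sentences(text):
    # Naive English-only splitter: break after ., ! or ? followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def toy_embed(sentence):
    # Stand-in for a real embedding model: a sparse word-count vector.
    return Counter(re.findall(r"[a-z0-9]+", sentence.lower()))


def cosine_similarity(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def percentile(values, pct):
    # Linear-interpolation percentile over a non-empty list.
    ordered = sorted(values)
    rank = (len(ordered) - 1) * pct / 100.0
    lo, hi = math.floor(rank), math.ceil(rank)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (rank - lo)


def semantic_chunks(text, breakpoint_percentile=95, embed=toy_embed):
    sentences = split_sentences(text)
    if len(sentences) < 2:
        return sentences
    vectors = [embed(s) for s in sentences]
    # Distance between each consecutive sentence pair.
    distances = [
        1.0 - cosine_similarity(vectors[i], vectors[i + 1])
        for i in range(len(vectors) - 1)
    ]
    # Per-document threshold: adapts to this document's own distances.
    threshold = percentile(distances, breakpoint_percentile)
    chunks, current = [], [sentences[0]]
    for i, distance in enumerate(distances):
        if distance > threshold:  # sharp drop in similarity: start a new chunk
            chunks.append(" ".join(current))
            current = []
        current.append(sentences[i + 1])
    chunks.append(" ".join(current))
    return chunks


manual = (
    "Install the bracket on the wall. Install the shelf on the bracket. "
    "Check the shelf on the wall. Reset the router if wifi fails. "
    "Reset the router if the wifi light fails."
)
for chunk in semantic_chunks(manual, breakpoint_percentile=95):
    print(chunk)
```

On this toy input the only boundary at the 95th percentile falls between the shelf sentences and the router sentences. Lowering `breakpoint_percentile` adds boundaries at weaker shifts.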

One hard limitation worth knowing upfront: most open-source implementations use regex-based sentence segmentation built for English. For multilingual pipelines, you'll need language-specific tokenizers or a segmentation model that explicitly handles your target languages.
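To make the limitation concrete, here is a small sketch of the kind of English-oriented regex splitter being described, applied to an English string and a Japanese one. The pattern is an illustrative assumption, not the exact expression used by any specific library.

```python
import re

# Splits after ASCII ., ! or ? when followed by whitespace.
ENGLISH_BOUNDARY = re.compile(r"(?<=[.!?])\s+")


def split_english(text):
    return [s for s in ENGLISH_BOUNDARY.split(text.strip()) if s]


english = "Mount the bracket. Then attach the shelf."
# Japanese: "Attach the bracket. Next, secure the shelf."
japanese = "ブラケットを取り付けます。次に棚を固定します。"

print(len(split_english(english)))   # 2
print(len(split_english(japanese)))  # 1: no ASCII punctuation or spaces to split on
```

With the whole passage treated as one "sentence", there are no consecutive pairs to compare, so boundary detection never gets a chance to run.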

What the Research Actually Shows

Qu et al. (2024) benchmarked semantic and fixed-size chunking across multiple domains and found the retrieval performance gap between methods was minimal. More importantly, the choice of embedding model had a larger measurable effect on retrieval quality than chunking strategy did. If you're iterating on chunking parameters while running a suboptimal embedding model, you're probably working on the wrong part of the pipeline.

An IEEE benchmark study evaluating 90 chunker-model configurations across seven arXiv domains found that a sentence-aware splitter with a 512-token window and 200-token overlap outperformed breakpoint-based semantic chunking on overall retrieval scores. Domain mattered a lot: finance corpora showed the largest performance delta between strategies, while astrophysics lagged considerably. Generalizing from a benchmark that doesn't match your document types is a common source of misplaced confidence in chunking decisions.

Finally, Mishra et al. (2025) reported 82.5% precision for recursive chunking with TF-IDF weighted embeddings versus 73.3% for a semantic chunking baseline. The higher-performing pipeline also included LLM-generated metadata enrichment at indexing time, which could have driven a good portion of that gap. Metadata enrichment could in principle have helped either pipeline, so the comparison doesn't isolate the effect of chunking strategy.

None of this means chunking is irrelevant. It means that without evaluation on your own retrieval workload, benchmark results from other configurations are weak evidence for your architecture decisions.

Where Fixed-Size Chunking Degrades Retrieval

Fixed-size splitting produces systematic failures on certain content types. Tabular data gets partitioned so column headers land in a separate chunk from their rows, stripping the embedding of the schema context needed to interpret those values. Forward and backward references break when phrases like "as defined in the previous section" are encoded in a different chunk from the content they point to. On documents with frequent topic shifts, semantically unrelated content gets encoded into the same chunk, introducing noise into the vector representation that hurts retrieval precision.
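The tabular failure is easy to reproduce. The sketch below uses whitespace-separated words as a stand-in for model tokens and a made-up spec table; both are assumptions for illustration only.

```python
def fixed_size_chunks(tokens, size):
    # Split purely on count, with no awareness of rows or sentences.
    return [tokens[i:i + size] for i in range(0, len(tokens), size)]


# Hypothetical table, flattened to text the way many extractors emit it.
table_rows = [
    "model | voltage | torque",
    "A100 | 12V | 3Nm",
    "B200 | 24V | 5Nm",
    "C300 | 48V | 9Nm",
]
tokens = " ".join(table_rows).split()

for chunk in fixed_size_chunks(tokens, size=8):
    print(" ".join(chunk))
```

Only the first chunk contains the header row. The later chunks hold values such as `24V` and `5Nm` with nothing to say which column they belong to, and the first data row is cut in half.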

Semantic chunking addresses these failure modes, but documents with low structural variation—FAQs, structured knowledge bases, standardized reports—already cluster semantically related content within fixed-size windows. For these, fixed-size splitting performs comparably while requiring no sentence-level inference at ingestion time, so applying semantic chunking uniformly across your corpus is rarely the right call.

Implementation Options

Two common implementations are embedding-based threshold detection and sliding window with overlap. Which one fits depends on your content type and use case, so evaluate both against your own data rather than picking one by default.

Embedding-Based Threshold Detection

Embedding-based threshold detection is the most widely used approach. You encode each sentence, compute cosine similarity between consecutive pairs, and insert a boundary wherever similarity drops sharply, meaning the pair's distance (1 minus similarity) exceeds your chosen percentile of all pairwise distances in the document.

Starting at the 95th percentile is reasonable since it only breaks at the most pronounced topic shifts, which reduces fragmentation risk. Dropping to the 75th-80th percentile increases boundary frequency and can help with long single-topic sections, but introduces more fragmentation. Validate chunk size distributions and retrieval performance on your actual data before deploying either.
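One way to check chunk size distributions before deploying is a small report like the following. It is a sketch with assumptions: the default `count_tokens` counts whitespace-separated words and should be swapped for your embedding model's tokenizer, and the `max_tokens` and `min_tokens` limits are placeholders to set from your own model's context window.

```python
import statistics


def chunk_size_report(chunks, max_tokens, min_tokens=1,
                      count_tokens=lambda chunk: len(chunk.split())):
    # Summarize chunk lengths and flag chunks outside the allowed range.
    sizes = [count_tokens(chunk) for chunk in chunks]
    return {
        "count": len(sizes),
        "min": min(sizes),
        "median": statistics.median(sizes),
        "max": max(sizes),
        "over_limit": sum(1 for size in sizes if size > max_tokens),
        "under_limit": sum(1 for size in sizes if size < min_tokens),
    }


sample_chunks = ["a b c", "a b c d e f g h", "a"]
print(chunk_size_report(sample_chunks, max_tokens=5, min_tokens=2))
```

Running the same report at each candidate percentile shows whether a lower threshold is fragmenting the corpus into many tiny chunks or a higher one is leaving chunks above the model's limit.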

Sliding Window With Overlap

Sliding window with overlap takes a different approach: adjacent chunks share a configurable token overlap rather than breaking at detected topic shifts. This reduces information loss at chunk boundaries and works better for narrative text where topics blend gradually. A 10-20% overlap ratio is typical, with the expected tradeoffs in index size and retrieval redundancy.
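A minimal sketch of the sliding window, assuming the input is already a list of tokens from whatever tokenizer your embedding model uses. The function name and parameters are hypothetical.

```python
def sliding_window_chunks(tokens, window, overlap):
    # Each chunk holds up to `window` tokens and repeats the last
    # `overlap` tokens of the previous chunk.
    if window <= 0:
        raise ValueError("window must be positive")
    if not 0 <= overlap < window:
        raise ValueError("overlap must be >= 0 and smaller than window")
    step = window - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + window])
        if start + window >= len(tokens):
            break  # the final window already reaches the end of the input
    return chunks


tokens = list(range(10))
for chunk in sliding_window_chunks(tokens, window=4, overlap=1):
    print(chunk)
```

With a 512-token window, a 10-20% overlap corresponds to roughly 51 to 102 shared tokens between neighbors, which is where the extra index size comes from.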

Content type should also drive the choice of chunking approach more broadly. Legal contracts tend to benefit from hierarchical chunking that keeps clause structure intact. Conversational transcripts, where context builds across multiple turns, are better served by sliding window overlap. Knowledge bases with atomic Q&A pairs usually work fine with fixed-size chunks since the document structure already aligns with retrieval granularity.

Ingestion Cost

Semantic chunking runs sentence-level embedding and pairwise similarity computation at ingestion time, which adds real overhead compared to splitting on token counts. A rough planning figure is a 5-10x increase in ingestion compute, though the actual cost varies significantly with embedding model size, average document length, batch size, and whether you're calling an external API or running inference locally. Profile on your own infrastructure rather than extrapolating from external benchmarks.

The practical mitigation is routing by document type: run only the content types where semantic chunking demonstrably improves retrieval through the more expensive pipeline, and use fixed-size splitting for the rest.
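Routing can be as simple as a lookup on a document-type label. The sketch below assumes each document already carries such a label and that you have decided, from your own evaluation, which labels justify the expensive path. The labels and the two placeholder chunkers are hypothetical stand-ins.

```python
def route_and_chunk(documents, semantic_chunker, fixed_chunker, semantic_types):
    # documents: iterable of (doc_type, text) pairs.
    # semantic_types: doc_type labels sent through the expensive pipeline.
    results = []
    for doc_type, text in documents:
        use_semantic = doc_type in semantic_types
        chunker = semantic_chunker if use_semantic else fixed_chunker
        results.append({
            "doc_type": doc_type,
            "strategy": "semantic" if use_semantic else "fixed",
            "chunks": chunker(text),
        })
    return results


def by_paragraph(text):
    # Placeholder for a real semantic chunker.
    return [p for p in text.split("\n\n") if p]


def by_words(text, size=4):
    # Placeholder for a real fixed-size token splitter.
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


docs = [
    ("manual", "Install the unit.\n\nTroubleshoot the unit."),
    ("faq", "Q: Is it waterproof? A: Yes."),
]
for item in route_and_chunk(docs, by_paragraph, by_words, semantic_types={"manual"}):
    print(item["doc_type"], item["strategy"], item["chunks"])
```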

Making the Call

Start with fixed-size chunking at 512-1,024 tokens, and put a retrieval evaluation set in place from the beginning. Run it against a representative set of queries, then inspect the actual failure cases rather than relying solely on aggregate metrics. If chunk boundary placement is consistently responsible for retrieval failures (concepts split across chunks, retrieved context missing critical setup), run a semantic chunking variant on the same eval set and compare. If quality is similar, fixed-size wins on ingestion cost.
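A comparison like this needs a metric that works across chunkers, since chunk ids differ between strategies. One option, sketched here as an assumption rather than a standard, is to label each eval query with a short answer snippet and count a hit when any of the top-k retrieved chunks contains it. The function names and the sample data are hypothetical.

```python
def hit_rate_at_k(retrieved, expected_snippets, k):
    # retrieved: query -> ranked list of chunk texts from one pipeline.
    # expected_snippets: query -> text that a correct chunk must contain.
    hits = 0
    for query, snippet in expected_snippets.items():
        top_k = retrieved.get(query, [])[:k]
        if any(snippet in chunk for chunk in top_k):
            hits += 1
    return hits / len(expected_snippets)


def missed_queries(retrieved, expected_snippets, k):
    # The failure cases to read by hand, not just count.
    return [
        query
        for query, snippet in expected_snippets.items()
        if not any(snippet in chunk for chunk in retrieved.get(query, [])[:k])
    ]


expected = {"q1": "Reset the router", "q2": "Check the fuse"}
fixed_results = {
    "q1": ["Mount the bracket", "Reset the router if wifi fails"],
    "q2": ["Unrelated text", "More unrelated text"],
}
print(hit_rate_at_k(fixed_results, expected, k=2))   # 0.5
print(missed_queries(fixed_results, expected, k=2))  # ['q2']
```

Running both functions on the fixed-size and semantic outputs for the same queries gives the aggregate comparison plus the list of misses to trace back to boundary placement.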

Qu et al.'s findings point to embedding model selection, and the Mishra et al. comparison points to metadata enrichment at indexing time, as variables that tend to move retrieval quality more than chunking strategy does. If failures persist after addressing chunking, those are the higher-leverage variables to look at next.

| Factor | Fixed-size | Semantic |
| --- | --- | --- |
| Ingestion compute | Low | Higher; requires sentence-level inference at ingestion |
| Retrieval fit | Works when content clusters within fixed windows | Better for documents with frequent, unpredictable topic shifts |
| Ingestion latency | Near-instant | Adds per-document latency |
| Best when | Budget constrained, corpus is structurally uniform, or boundaries aren't causing failures | Topic integrity matters, document types vary widely, failures trace to mid-concept splits |

Working Through This in Production

Getting chunking right is only part of the problem. Embedding model selection, index configuration, retrieval parameters, reranking, and evaluation methodology all interact with it, and tuning one component while others are misconfigured can produce apparent improvements that don't hold, or mask real gains that are already there.

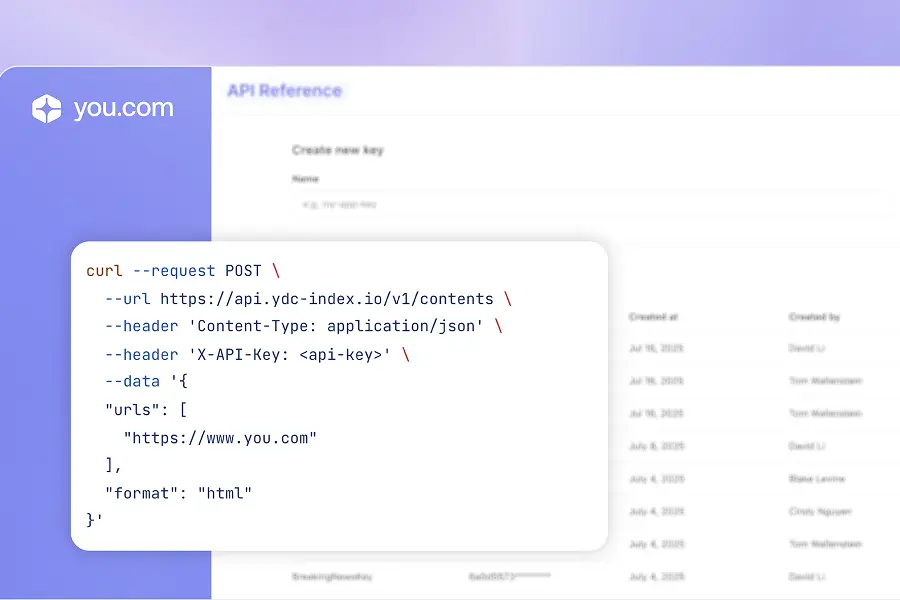

You.com works with enterprise teams on production RAG architecture: profiling your document corpus, identifying where retrieval actually breaks down in your query distribution, and building around your real content types rather than a generic template. That work spans HR policy agents, fact-checking pipelines, website search chatbots, and more.

If this resonates with your team, book a demo to see how our real-time search infrastructure fits into your pipeline.

Frequently Asked Questions

What percentile threshold should I start with?

Start at the 95th percentile, which only fires at the most pronounced topic shifts and gives you a conservative baseline before tuning. If your chunks are coming out too large for your embedding model to represent well, step the threshold down incrementally and measure the effect on retrieval quality at each step.

Does semantic chunking work for non-English content?

Most open-source implementations use regex-based sentence segmentation built for English. For multilingual pipelines, verify your embedding model covers your target languages and plan on language-specific tokenizers from the start.

What are the most common implementation mistakes?

Four come up repeatedly. The first is skipping retrieval evaluation before changing chunking strategy, which makes it impossible to attribute quality changes to the right variable. The second is using character offsets instead of token counts, which produces inconsistent chunk sizes relative to your embedding model's context window. The third is ignoring maximum chunk size constraints. The fourth is applying a single strategy across document types with very different structural properties.

Is chunking the most important variable in a RAG pipeline?

Per Qu et al. (2024), probably not. Embedding model selection had a larger impact on retrieval quality in their benchmarks than chunking strategy did. Start there, and with metadata enrichment at indexing time, before spending significant effort on chunking.
