TLDR: AI search engines combine retrieval with generation to produce synthesized answers instead of link lists. Traditional search returns ranked web pages based on keyword matching and link authority. AI search platforms, however, take a different approach: they retrieve relevant information first, then generate contextual responses. This architectural shift changes how enterprises discover knowledge, serve customers, and build applications.

While traditional search returns ranked web pages based on keyword matching and link authority, AI search engines combine retrieval with generation to produce synthesized answers instead of link lists. They analyze the context, intent, and semantics of each user query, then execute multiple searches simultaneously on the user's behalf.

The AI then sorts through these results to identify information most relevant to the user's initial query, consolidating findings into comprehensive, synthesized responses while also surfacing the most pertinent source materials.

This guide explains the technical mechanics behind each stage and includes evaluation frameworks for development teams and AI transformation leaders assessing search API providers.

The Architecture of an AI Search Engine

The architectural shift—from simple keyword matching to intelligent query expansion and contextual analysis—changes how enterprises discover knowledge, serve customers, and build applications.

This difference creates three critical capabilities that distinguish AI search from traditional approaches:

- Intent understanding: The system interprets what users actually want rather than matching literal keywords, breaking down complex queries into multiple subtopics and understanding the true information needed behind questions.

- Real-time data synthesis: Through query fan-out and parallel searching, information from multiple sources combines into coherent, complete answers instead of disconnected search results, with the AI intelligently filtering and consolidating findings.

- Context-aware responses: Results adapt to conversation history and user preferences rather than treating each query independently, ensuring responses remain relevant to the user's original intent.

The key takeaway: traditional search optimizes for finding the right document. AI search optimizes for generating the right answer.

How AI Search Engines Work: Step-by-Step

Here's what happens when a query hits an AI search engine. The system processes it through five interconnected stages that convert natural language into semantically grounded responses. Understanding these stages helps technical teams build reliable, scalable systems that deliver accurate results at enterprise scale.

1. Indexing and Crawling with AI

Unlike traditional search engines that periodically crawl web pages, AI search systems implement streaming ingestion architectures.

The indexing process follows a systematic workflow:

- Content acquisition from diverse sources, including web pages, databases, and enterprise documents

- Document chunking into semantic segments that preserve meaning while allowing precise retrieval.

- Vector embedding generation using transformer models that capture semantic meaning

- Storage optimization in specialized vector databases designed for high-dimensional data

- Implementation of Approximate Nearest Neighbor (ANN) indexing structures like HNSW algorithms for efficient similarity search

This AI-powered indexing process ensures content remains current and accessible. The system continuously updates knowledge without waiting for scheduled crawls. In other words, when news breaks at 2 PM, the index reflects it by 2:05, not tomorrow morning.

2. Contextual Understanding and Intent Detection

Someone searching for "quarterly revenue growth" and someone searching for "Q4 financial performance" may want the same information.

The system figures this out through multiple AI techniques:

- Query embedding converts text to vector representations using the same model as document indexing

- Intent classification identifies whether users seek factual information, troubleshooting assistance, or exploratory research

- Entity recognition extracts key concepts, products, people, and organizations mentioned in the query

- Query expansion adds related terms and synonyms to capture relevant information beyond exact keyword matches

- Context incorporation integrates conversation history and user preferences to personalize understanding

3. Semantic Ranking and Personalized Results

How do AI search engines find the best matches among millions of data points without sacrificing speed? Enterprise systems often use a two-step search process that first narrows down results and then finds the most relevant matches by comparing the meanings of information. They do this by turning words into numbers and measuring how close their meanings are using mathematical formulas like cosine or Euclidean distance.

Within the two-step process, Bii-Encoder models are used for efficient large-scale retrieval (step one) and Cross-Encoder models for precise re-ranking (step two), ensuring both speed and accuracy.

Personalization factors, domain-specific boosting, and diversity mechanisms further refine the results by incorporating user history, enterprise priorities, and ensuring a broad coverage of relevant information, making the search experience more tailored and comprehensive for each user.

- Bi-Encoder models retrieve top-k result candidates efficiently at scale

- Cross-Encoder models re-rank results for accuracy and precision

- Personalization factors incorporate user history and preferences

- Domain-specific boosting applies enterprise knowledge priorities

- Diversity mechanisms ensure complete coverage of relevant information

Speed and precision usually trade off against each other, but this hybrid architecture delivers both. Bi-encoders handle the initial sweep quickly while cross-encoders fine-tune the ranking for accuracy. Feedback loops track which results actually satisfy user needs, so the ranking keeps improving over time.

4. Real-Time Content Synthesis, Answer Generation, and Citation Support

This is where retrieval meets generation. Retrieved documents get formatted and injected into LLM prompts alongside the original query, and Retrieval-Augmented Generation (RAG) architecture grounds the response in factual information through five mechanisms:

- Context preparation formats retrieved content for optimal LLM processing

- Prompt engineering structures instructions for accurate, relevant generation

- Source attribution maintains document provenance throughout processing

- Response synthesis generates answers grounded in retrieved documents

- Citation linking connects each claim to verifiable sources

RAG prevents hallucinations by requiring the model to reference retrieved information rather than relying solely on training data. Citations provide transparency and allow users to verify claims by reviewing source documents. Think of it as the difference between "I think the answer is X" and "According to this source, the answer is X."

5. Continuous Learning and Adaptation

Here's a challenge unique to enterprise AI: security and compliance requirements may mandate that customer queries aren't logged or stored, which limits query-level training data. Platforms improve instead through system-level optimizations, using aggregate performance metrics like response times, error rates, and retrieval accuracy to guide better retrieval algorithms, ranking models, and citation mechanisms. The approach delivers continuous improvement while still meeting enterprise data handling standards.

Core Components of Modern AI Search Infrastructure

Now that we've covered how AI search engines work, let's cover how enterprises can go about implementing them. Four core technologies make all of this work, each solving a specific problem.

1. Search Index Architecture

Modern AI search relies on multiple specialized indices working together to deliver comprehensive results:

General Web Index

Optimized for LLM search and retrieval across the broad web, this index provides baseline coverage for general knowledge queries and ensures AI systems can access widely available information.

Vertical Web Index

Built for deep web indexing on specific topics such as categories of legal content or consumer product catalog data for retail competitive analysis. These specialized indices go deeper into niche domains than general crawlers, capturing information that would otherwise remain hidden.

Internal Private Data Index

Enterprises require secure indexing of proprietary documents, databases, and knowledge bases. Private indices maintain strict data governance and access controls while enabling AI search across internal resources.

2. Hybrid Search Capabilities

Vector Databases and Embeddings

Vector databases store and retrieve high-dimensional embeddings that represent semantic meaning. Unlike traditional databases (which organize data in rows and columns with exact match lookups), vector databases organize data in high-dimensional space with similarity-based retrieval. Approximate Nearest Neighbor (ANN) algorithms like HNSW allow systems to search billions of vectors in milliseconds, making real-time semantic search practical for production applications.

Keyword Search Index

While vector search excels at semantic understanding, keyword search remains essential for specific use cases. Running alongside vector search indices, traditional keyword indices handle exact match queries, technical identifiers, product codes, and scenarios where literal matching outperforms semantic similarity. The most effective AI search systems combine both approaches, automatically routing queries to the appropriate index or blending results from multiple search methods.

3. Large Language Models (LLMs) for Generation

Not all LLMs are created equal, and that's actually useful. Different models excel at different tasks: some prioritize speed, others prioritize reasoning depth, others optimize for specific domains like code or mathematics.

Model selection depends on what you're building. Customer support applications typically choose faster models with moderate accuracy (response time beats perfect phrasing). Research applications lean toward reasoning-optimized models where deeper analysis justifies the extra latency.

4. Real-Time Data Integration

Real-time search integration addresses static training data limitations. When models generate responses without current information access, they produce outdated answers or hallucinate plausible-sounding but incorrect information. Ask a model without web access about yesterday's stock price, and you'll see the problem immediately.

Real-time web search APIs provide current data for queries about recent events, prices, news, or rapidly changing information. This integration requires specialized infrastructure handling web-scale data retrieval within response time budgets measured in milliseconds.

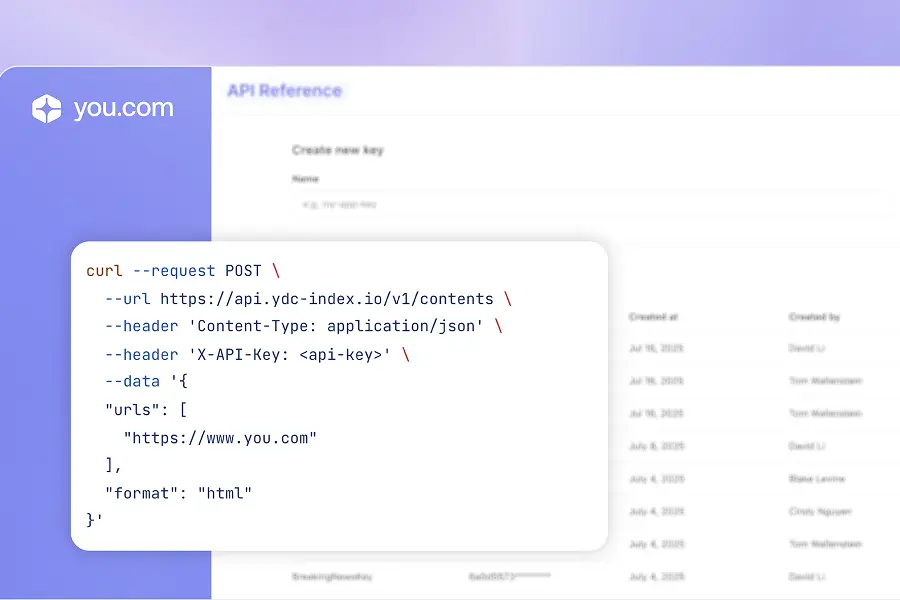

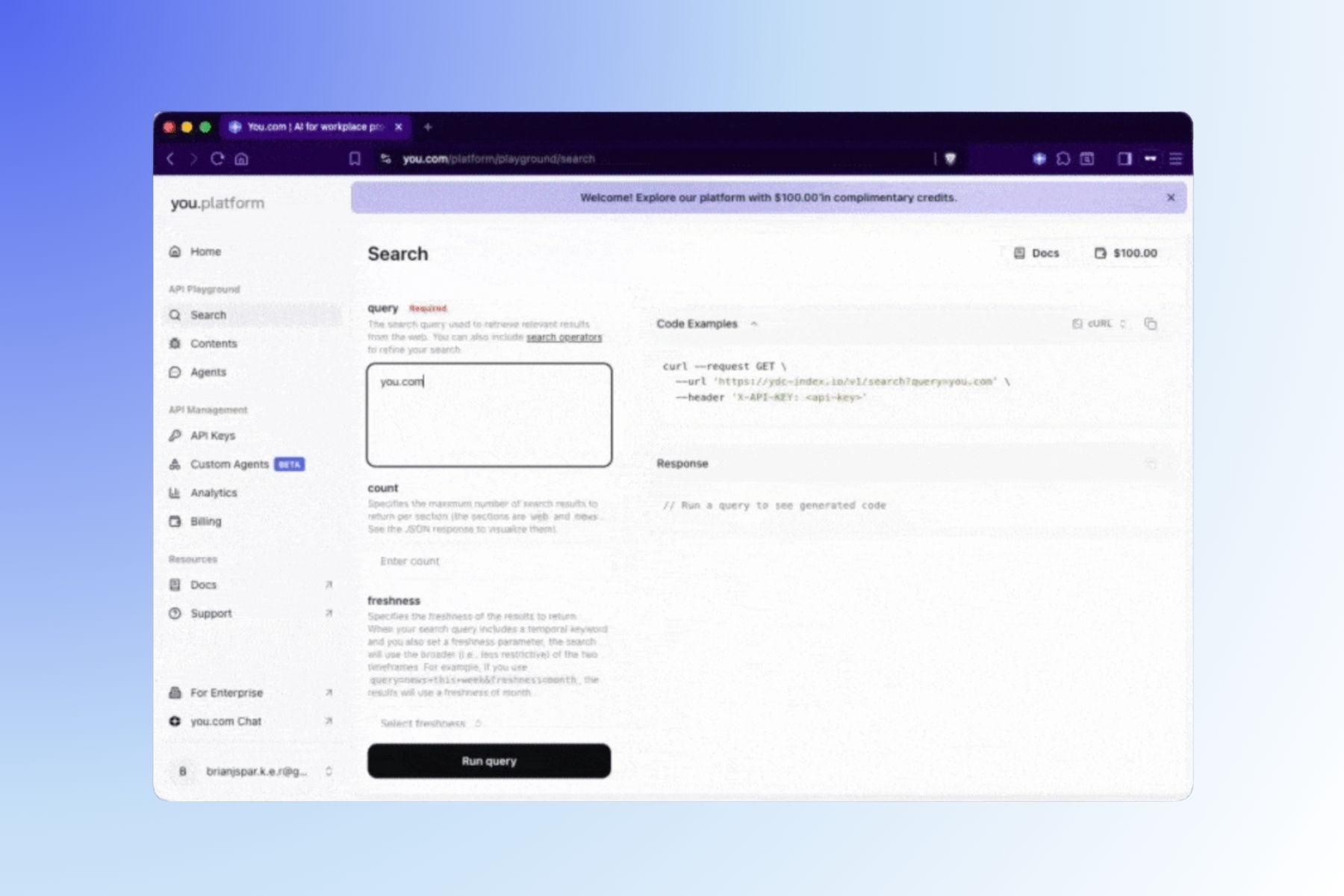

5. APIs and Integration Infrastructure

Connecting all of this to existing systems requires composable APIs with standardized interfaces. Developers can access real-time web search, news data, and custom information sources through any application stack.

You.com, for example, supports custom model routing and secure vertical indexing. Enterprises integrate specialized knowledge sources while maintaining strict data governance. The same infrastructure powers customer portals, employee knowledge bases, and analytics dashboards, with security boundaries preserved between data sources.

Improve Enterprise Search with AI

The You.com architecture balances technical power with enterprise readiness. Our platform combines general web indices, specialized vertical indices, and secure private data indexing with hybrid search capabilities that blend vector and keyword approaches. Streaming ingestion enables real-time updates, specialized vector databases power semantic retrieval, and mandatory source attribution ensures compliance.

With zero data retention options, SOC 2 certification, self-service SSO deployment, and composable APIs that integrate with existing systems, enterprises can implement AI search without the typical 6-month integration timeline.

The result: direct answers backed by relevant sources, delivered through infrastructure built for production scale.

Want to learn more? Visit You.com or get started with the Web Search API for direct-answer applications built for enterprise and developer teams.

Frequently Asked Questions

How do AI search engines work?

AI search engines analyze the context, intent, and semantics of each user query, then execute multiple searches simultaneously to gather comprehensive information. The system sorts through results to identify the most relevant information embedded across sources, then generates synthesized answers using language models while surfacing pertinent source materials. The process involves query fan-out (breaking questions into subtopics), parallel retrieval across multiple indices, intelligent filtering and consolidation, and contextual response generation with source attribution.

What technologies power leading AI search engines in 2025?

Modern AI search relies on four core technologies: vector databases with embeddings for semantic retrieval using algorithms like HNSW, multiple specialized search indices (general web, vertical web, and private data indices), large language models optimized for different tasks (speed vs. reasoning depth), real-time data integration through web search APIs for current information, and hybrid search capabilities combining vector and keyword indices. Composable architectures tie these components together for enterprise integration while maintaining security boundaries between data sources.

How can enterprises ensure data privacy and compliance when using AI search solutions?

Enterprise compliance offers zero data retention policies, SOC 2 Type II certification, and enterprise-grade authentication including SSO and RBAC. GDPR compliance demands Data Processing Agreements (DPAs) and verification that enterprise tiers prevent training use of business data.

HIPAA compliance requires Business Associate Agreements (BAAs) with specific feature limitations for healthcare data.

What is Retrieval-Augmented Generation (RAG) and why is it important for AI search?

RAG combines retrieval systems with language models to address LLM limitations including static knowledge cutoffs and potential hallucinations. RAG dynamically retrieves relevant documents from authoritative sources, augments LLM prompts with retrieved context, and generates responses grounded in verifiable information.

This architecture gives users access to current information while maintaining factual accuracy through source attribution.

What criteria should B2B organizations use when evaluating AI search platforms?

Technical teams should evaluate five key criteria: citation accuracy (critical for audit and compliance), model flexibility (to prevent vendor lock-in), integration complexity (how it fits with existing enterprise systems), security certifications (SOC 2 and industry-specific requirements like HIPAA), and deployment speed (time from procurement to production use).

Proof-of-concept testing with production-representative data validates platform capabilities against specific business requirements.

To learn more about how You.com evaluates search APIs, check out our guide, "How to Evaluate Search for the Agentic Era."

.webp)

.webp)

.jpg)

.png)